Chronicling America’s Newspaper Biographies as Data

Daniel Evans is a PhD student at the University of Illinois - Urbana Champaign. His research uses natural language processing and machine learning to explore the history of the book, the history of computing, and digital archives. More recently, his work has been centered on equity and access, information retrieval, and cultural analytics in digital libraries.

In the fall of 2022, Chronicling America passed a major research milestone: the NEH-funded project announced that it had digitized 20 million newspaper pages and New Hampshire joined the program as the 50th state.

The project utilizes both structured and unstructured data to describe 2.7 million issues across the entirety of Chronicling America. The Library of Congress’s CONSER Program dictates the form and content of the cataloging metadata attached to each newspaper title. CONSER is the standard for all Chronicling America MARC records and for all other Library of Congress serials. Many institutions across the country also use CONSER standards for their serials cataloging, and it is helping to increase the interoperability of serials metadata, which is notoriously challenging both to create and maintain.

In addition to the highly structured MARC data, Chronicling America has another useful data feature not widely included in other digitized newspaper resources: title essays. These supplemental descriptions are about 500 words of prose, contributed by state partners. They provide a deeper context to the newspaper than is available via the CONSER metadata. As of this post, there are 3,680 title essays, and you can find them on the bottom right of the page for a given newspaper title.

As the Pathways Intern with NEH’s Division of Preservation & Access, I continued the work begun by previous NEH interns by focusing on the ways in which we could use the unstructured data of the title essays to complement and enhance the structured data of the CONSER catalog records. Previous interns started this work by making the editors and publishers of ethnic newspapers more prominent. By linking the individuals named in these title essays to the Library of Congress Named Authority File (LCNAF), they disambiguate and standardize the unique names of people. In turn, they made visible writers and editors who were historically marginalized and excluded from history. Their work in record enhancement improved search processes and gave people of color representation in LCNAF records by linking their names to their contributions.

This work complements other projects such as the Newspapers as Data project and Newspaper Navigator. These projects, combined with the work of my predecessors at NEH, served as an inspiration for thinking about ways to reimagine the NDNP title essays as packable, navigable data. They prompt us to reconsider the title essays as malleable data that could be a useful starting point for both computational analysis and as endpoints for bulk bibliographic updates. If newspapers can be data, then surely title essays can as well! I set out to improve the process of creating data sets from Chronicling America, and I am very pleased to share the results of my work.

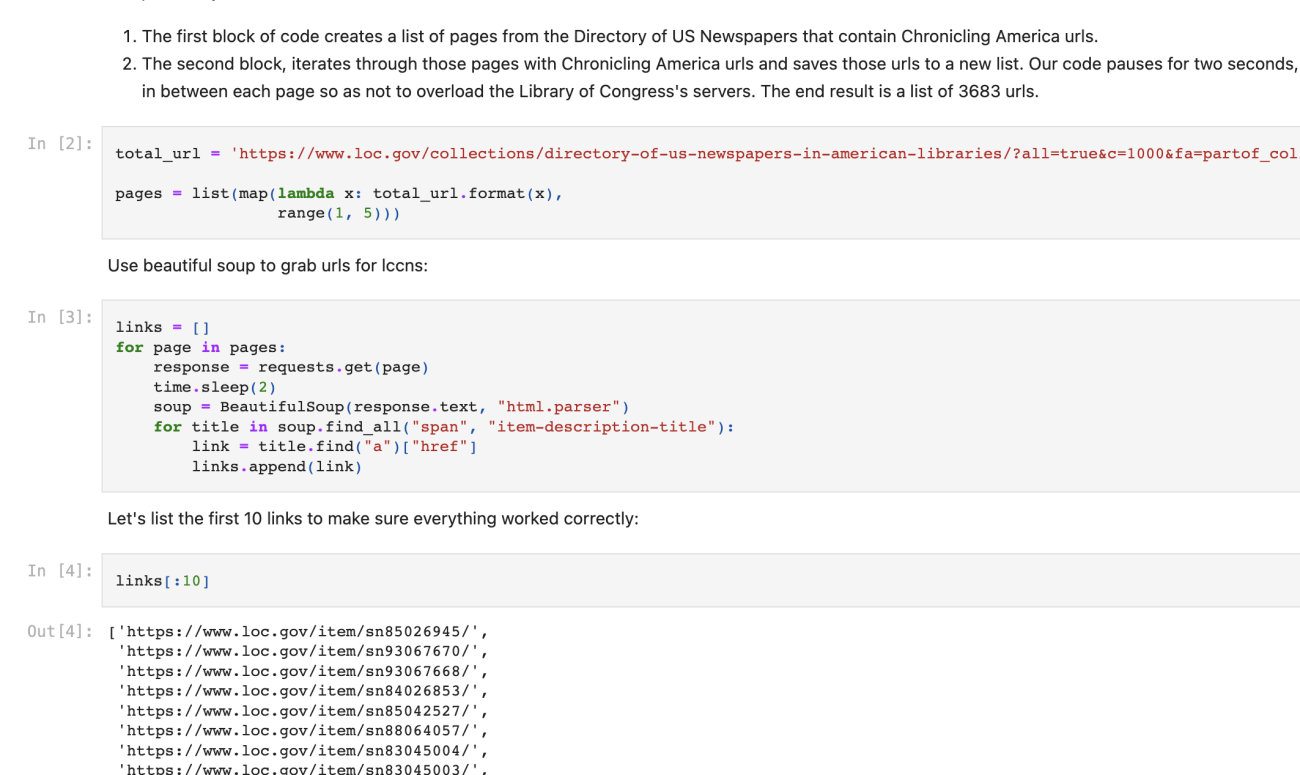

To create these data sets, I produced two Jupyter notebooks that downloaded Chronicling America title essays from the Library of Congress API. The first notebook creates a dataset based on both the structured MARC data and the unstructured title essays, including title, date created, dates of publication, description, title essay, title essay contributor, language, location, Library of Congress Control Numbers (LCCN), subject headings, newspaper title, and permalink URL. As it currently stands, the code retrieves all the Chronicling America title essay content and creates a CSV dataset of about 3,680 essays.

The second Jupyter notebook creates a point of entry for the analysis of these datasets by using basic natural language processing (NLP) techniques. Included in this notebook is code that allows researchers to extract named entities such as individuals, locations, and organizations from the title essays. While many elements in this notebook are experimental, the hope is that it will inspire researchers with avenues for further exploration of these title essay data sets.

These notebooks are meant to assist in drawing out research questions from American print culture. They act as starting points through which researchers can download all title essays and associated metadata, but also allow researchers to curate their own data sets through the selection of specific time periods, locations, or metadata fields. Furthermore, the code within them provides points for researchers to ask further questions from Chronicling America that are not immediately available in the current metadata or full-text search. The code within these notebooks can be adapted to ask such questions as:

- Which newspapers were published by African American printers in the American Southwest?

- Which newspapers were funded by the Grand Army of the Republic?

- Where were suffrage newspaper published? Is there a network of their publishers and printers?

- How do publication trends change during and after Reconstruction in the American South?

- What were the funding sources and topics of Eastern European newspapers in the American Midwest?

- Which parts of the country are most likely to preserve rural newspapers?

- Which Native American tribes are best represented in historic newspapers?

- Which towns in Puerto Rico produced weekly newspapers?

Both notebooks are written in Python and assume little-to-no programming experience. Accessibility for researchers with all levels of programming abilities is the emphasis of these notebooks. Their goal is to provide researchers with a downloadable data set of all the title essays and to give them starter code so that they can create their queries specific to their own research needs and interests. I followed minimal computing principles in producing code that requires no installation on a personal computer and thus can exist as snapshotted architecture independent of personal hardware or software constraints.

The Jupyter notebooks can be found here as part of the Library of Congress’s GitHub repository. We hope these notebooks will prove useful for both new and experienced programmers and researchers of NDNP, and we can’t wait to see how they might aid in the study and understanding of historical newspapers