Swati Srivastava is a political theorist who, with support from an NEH grant, is exploring the role of artificial intelligence in contemporary political systems. It is a big and often complicated subject, partly because the reach of artificial intelligence, or AI, is much greater than people realize.

AI has become thoroughly enmeshed in our way of life. It shapes the entertainment we are exposed to, how we date and whom, the security apparatus that protects our nation, and the financial system that keeps our economy humming. We can’t understand the American system today without giving it some thought.

Rather than ask Srivastava to explain everything about artificial intelligence, we asked her to define some of the key terms that appear in her work and that of other political thinkers writing on the subject. The result is a lexicon, a glossary of a handful of terms to aid us in our roles as citizens and humanists trying to understand the brave new world in which we live.

Algorithms:

Algorithms are the computational rules behind technologies like GPS navigation, online search, and content streaming. Previously, algorithms were designed by programmers who defined their rulesets. Today, machine learning also makes it possible for algorithms, immersed in large datasets, to develop their own rules. Machine learning may be supervised by training algorithms or left unsupervised to operate with fewer cues. For instance, a handwriting-detection algorithm may learn by being trained on a database of pre-labeled handwritten images or through immersion in a database where the algorithm clusters images based on its own pattern identification.

Algorithmic Governance:

Government and commercial entities use machine-learning algorithms to model predictions for everyday decisions in a range of domains related to crime, credit, health, advertising, immigration, and counterterrorism. For instance, risk-assessment algorithms aim to correlate people’s attributes and actions—such as college education, use of certain phrases in social media posts, or the composition of social networks—with classifications as more or less risky. The data involved are increasingly granular. A mobile app-based lender may use an applicant’s phone battery charge at the time of application in addition to payment history to infer creditworthiness. Thus, “algorithmic governance” refers to how governments and corporations rely on AI systems to collect and assess information and make decisions. Importantly, such governance is not limited to governments. When private health insurers use algorithms to set premiums based on risk assessments, they, too, participate in algorithmic governance. Even more expansively, critical geographer Louise Amoore writes in Cloud Ethics that as algorithms become integrated in public and private infrastructures, they establish “new patterns of good and bad, new thresholds of normality and abnormality, against which actions are calibrated.” In this vein, algorithms govern by deploying their own rules and defining for us what constitutes a border crossing or a social movement or a protest, supplying actionable intelligence that can be used to shape realities still in the process of becoming, such as throttling public transportation to keep a protest from growing larger.

Algorithmic Bias:

An emerging field of “critical algorithm studies” across the humanities is reckoning with the social harms of algorithmic governance, especially concerning bias and discrimination. In Race After Technology, Ruha Benjamin, an associate professor of African-American studies at Princeton University, identifies a “New Jim Code” through which developers “encode judgments into technical systems but claim that the racist results of their designs are entirely exterior to the encoding process.” UCLA professor Safiya Noble’s landmark Algorithms of Oppression highlighted that Google displayed more negative image results, including pornographic images, for Black women and girls than for white counterparts. Others observe Google algorithms displaying ads for highly paid jobs to men more than to women and YouTube algorithms delivering problematic autocomplete results or racist image-tagging systems.

In addition to discriminatory impact based on legally protected categories like race and gender, Orla Lynskey, associate professor of law at the London School of Economics, warns that algorithmic bias may lead to distinctions that also prejudice systems against legally nonprotected groups, for example, people of lower socioeconomic status. Despite concerns with algorithmic bias, in 2020, the U.S. Department of Housing and Urban Development passed a rule that insulates defendants against housing discrimination liability when using algorithms for making housing decisions. (The rule remains on the books but has not been enforced by the Biden administration.)

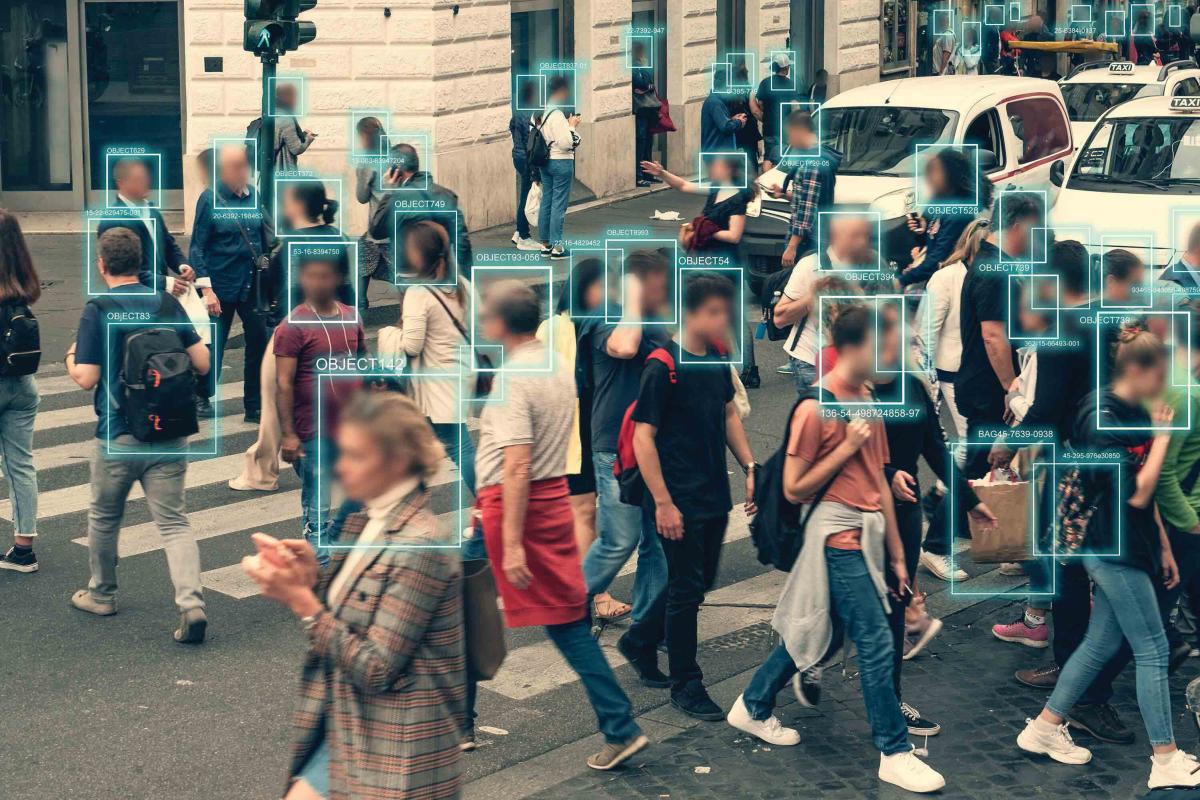

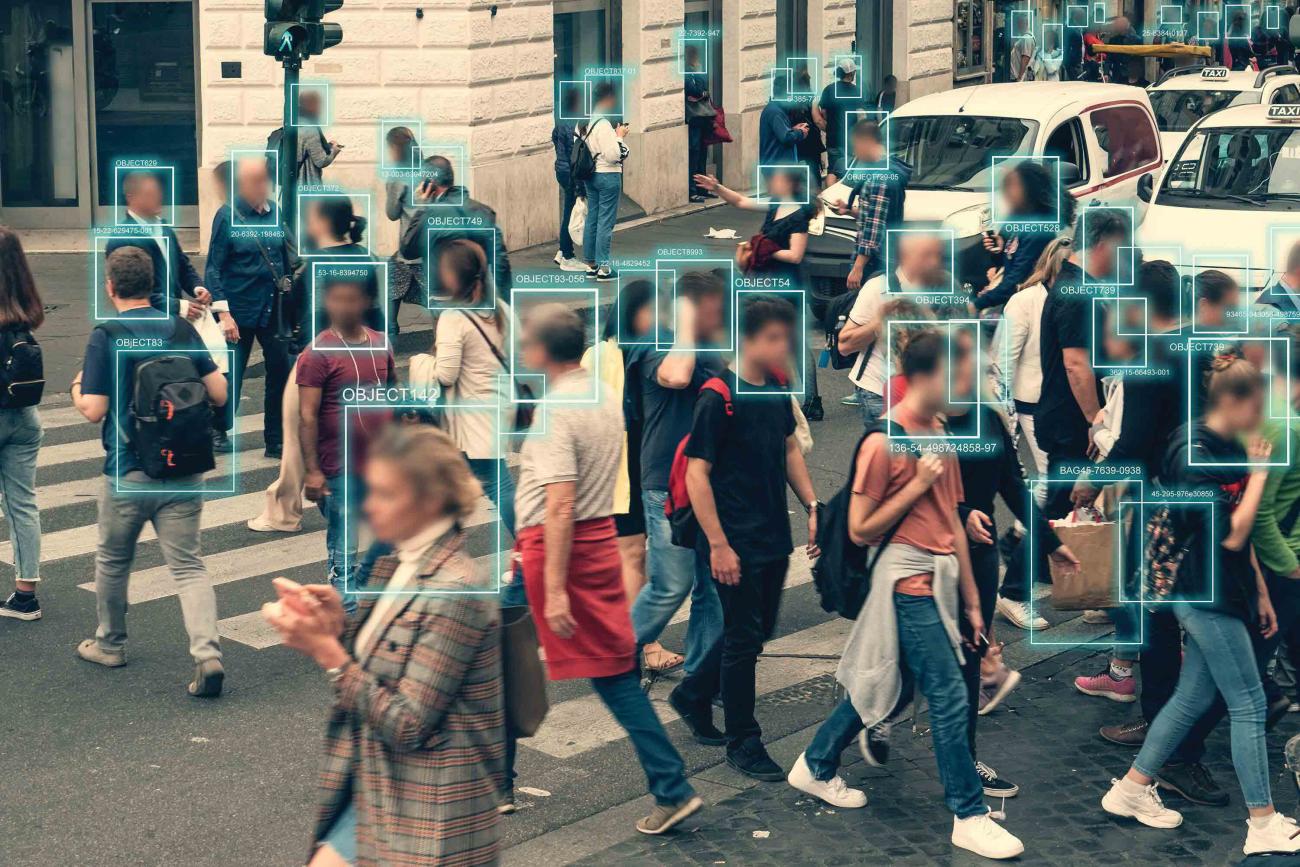

Surveillance Capitalism:

Algorithms also convert individual experience into data, the most valuable global commodity. In The Age of Surveillance Capitalism, Harvard Business School professor emerita Shoshana Zuboff argues that when we use a service such as a search engine or a social media platform, it extracts personal data so that more of our experiences are tracked and captured as “surplus value” for better predictions about us. Google algorithms pioneered surveillance capitalism through monetizing search data for targeted advertising.

Just as industrial capitalism relies on the use, if not exploitation, of labor for capital accumulation, surveillance capital is tied to the data “extraction imperative” (to quote Zuboff again) that exploits human freedom and dignity for more quantities and qualities of data to feed machine learning. Google receives 3.5 billion search inquiries daily, which means it collects 3.5 billion daily data points. But Google’s data extraction does not stop at searches. In the past, Google has read emails in Gmail and hacked Wi-Fi routers through its Street View cars. Today, Google gains access to phone applications on its Android mobile operating software and constantly monitors Google Home.

Facebook followed the Google playbook. Today, Facebook algorithms generate more than 6 million predictions per second for 2.8 billion users. Its tracking extends to even those without a Facebook account by employing “like” and “share” buttons as trackers on more than 10 million websites (including more than one third of the 1,000 most-visited websites). Facebook’s DeepFace is one of the largest facial datasets in the world. In 2018, Facebook introduced Portal, a smart home display whose AI-enabled camera tracks users automatically as they move about a room while its microphone constantly listens for a “wake word” (“Hey, Portal”). Facebook (like Amazon and Google) acknowledges that it reviews audio recordings before the wake word is activated to improve the service. In The Four, New York University professor Scott Galloway warns that through constant tracking on platforms, websites, phones, and homes, Facebook “registers a detailed—and highly accurate—portrait from our clicks, words, movements, and friend networks,” whether or not users are logged on.

Information Pollution:

As we turn to Google and Facebook for information and connection, they turn to us for creating unique algorithmically generated content. Facebook’s algorithms curate information visibility and ordering on a user’s news feed out of thousands of potential posts. Google-owned YouTube’s algorithms select a few recommended videos out of millions. This content curation is largely driven by capturing user attention with “clicks” without regard for quality. Siva Vaidhyanathan, director of the Center for Media and Citizenship at the University of Virginia, writes in Antisocial Media that “information pollution” consists of privileging false or misleading information, information eliciting strong emotions, and information that contributes to “echo chambers of reinforced beliefs,” all of which make “it harder for diverse groups of people to gather to conduct calm, informed, productive conversations.” Information pollution is also leveraged by governments for repression. On this basis, Amnesty International released a report in 2019 stating that Google and Facebook undermine human rights.

Algorithmic Opacity:

While algorithms aim to govern us, most of us do not understand algorithms. This asymmetry leads sociolegal scholar Frank Pasquale to observe in The Black Box Society that “the contemporary world more closely resembles a one-way mirror.” Unsupervised algorithms operating in complex, deep-learning machine structures are especially opaque because they have the ability to identify patterns in ways that may supersede human cognition. Without clarity on how unsupervised machines learn, it is difficult to assess the appropriateness of algorithmic inferences.

In supervised AI systems, there are already distinctions between “human-in-the-loop” with full human command, “human-on-the-loop” with possible human override, and “human-out-of-the-loop” with no human oversight. As people and institutions grow ever more comfortable relying on unsupervised algorithms, the very meaning of human command and control is in doubt.

Responsible AI:

Because algorithms are so influential and so opaque, more observers are calling for responsible artificial intelligence. Political theorist Colin Koopman warns, “We who equip ourselves with algorithms dispose ourselves to become what the algorithms say about us.” AI philosophers Herman Cappelen and Josh Dever center responsibility as a critical challenge: “We don’t want to live in a world in which we are imprisoned for reasons we can’t understand, subject to invasive medical conditions for reasons we can’t understand, told whom to marry and when to have children for reasons we can’t understand.”

In 2016, the U.S. Defense Advanced Research Projects Agency launched an explainable AI challenge, asking researchers to develop new AI systems that “will have the ability to explain their rationale, characterize their strengths and weaknesses, and convey an understanding of how they will behave in the future.” In Europe, the 2018 General Data Protection Regulation identified a “right to explanation” for algorithmic decision-making.

Computer scientists have responded to regulators and critics by developing some visualization methods to figure out how self-learning algorithms obtain their results. Others have focused on more disclosures and audits. In 2018, former Google engineer and ethicist Timnit Gebru, along with collaborators, proposed “datasheets for datasets,” where “every dataset be accompanied with a datasheet that documents its motivation, composition, collection process, recommended uses, and so on.” But theorists in critical algorithm studies counter that disclosing decision rules or source code will not, by itself, address the knowledge gap between algorithm designers and the public.